Meta’s launch of Meta Compute is easy to read as just another big tech company investing in AI infrastructure. But that would miss the bigger shift happening underneath the cloud market.

For most of the last decade, cloud computing meant one thing: large, horizontal platforms like AWS, Azure, and GCP offering an ever-growing suite of enterprise services—compute, storage, databases, networking, security, analytics, and more. The value proposition was convenience and breadth.

AI is breaking that model.

The Rise of AI-Native Cloud Players

Over the past few years, a new category of cloud companies has emerged. Players like CoreWeave and Nebius aren’t trying to be everything to everyone. They are narrowly focused on one thing: AI compute, backed by massive fleets of GPUs.

Their pitch is simple:

- Faster access to GPUs

- Better performance for training and inference

- Pricing and infrastructure designed specifically for AI workloads

No legacy baggage. No sprawling enterprise service catalogs. Just compute that works exceptionally well for AI.

This focus has allowed them to grow quickly in a market where GPU scarcity and performance matter more than feature completeness.

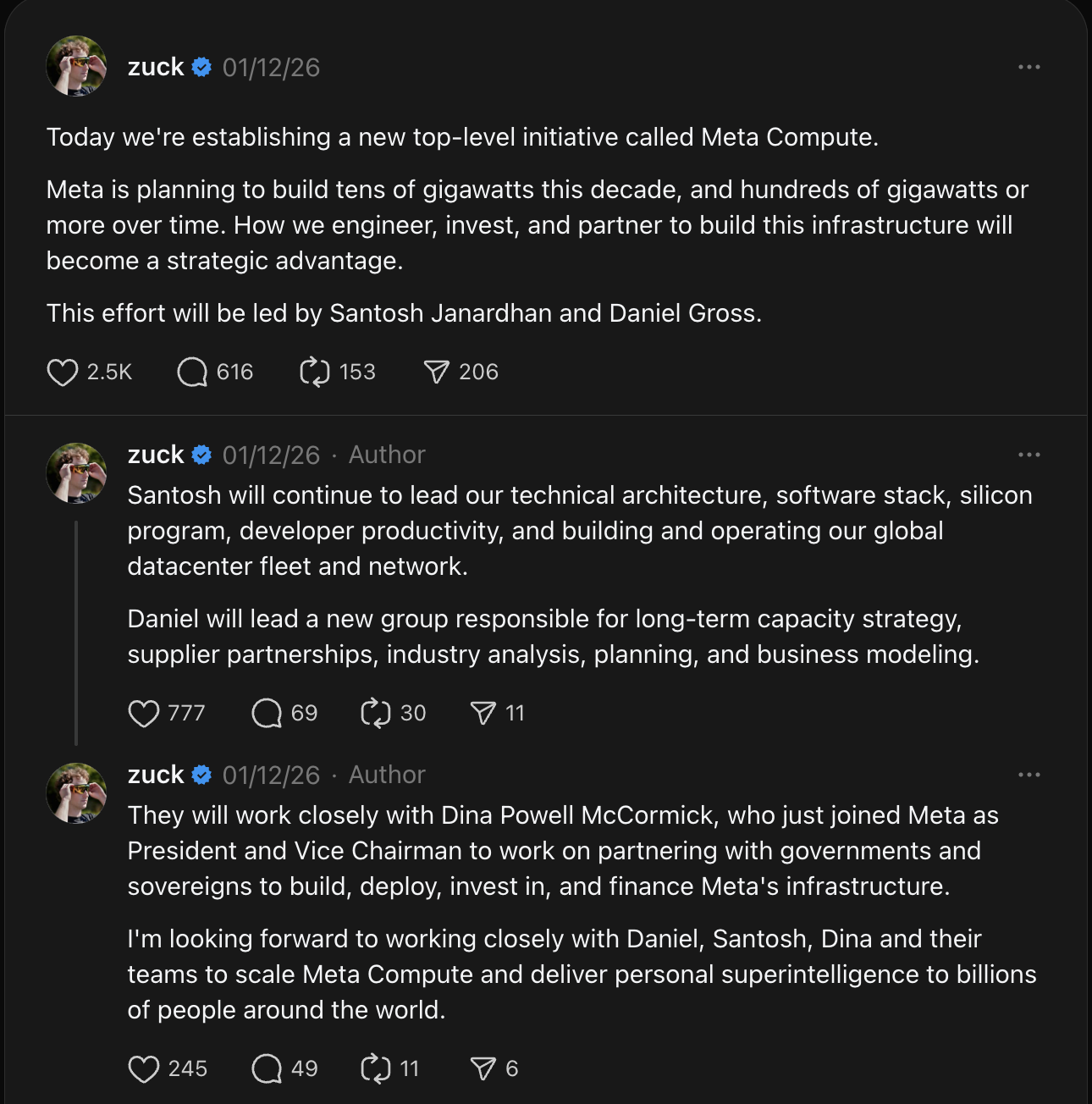

Where Meta Fits In

Meta Compute clearly starts in this AI-native camp.

Meta has some of the largest internal AI workloads in the world—recommendation systems, ads optimization, content ranking, generative AI models, and now consumer-facing AI products. Building bespoke infrastructure optimized for these workloads is table stakes.

But Meta isn’t just building “enough” infrastructure. It’s building at hyperscale, with deep vertical integration across hardware, networking, software, and AI frameworks.

That’s the key detail.

From Internal Infrastructure to External Platform

History suggests that when a company builds infrastructure at this scale, it rarely stays internal forever.

AWS grew out of the infrastructure Amazon built to run its retail business. Google’s internal systems eventually shaped GCP. Even Meta’s own open-sourcing of tools like React and PyTorch followed a similar pattern: solve internal problems first, then externalize.

Once Meta controls:

- Large-scale GPU supply

- AI-optimized data centers

- Software and orchestration tuned for AI workloads

- Continuous internal demand that amortizes costs

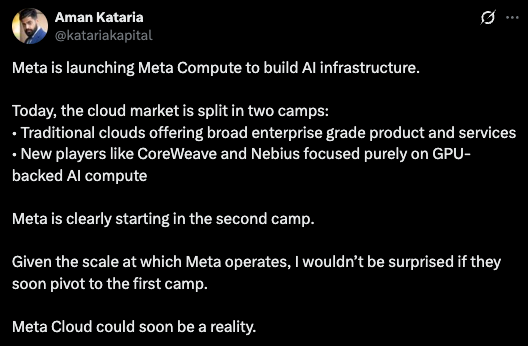

The leap from “internal compute” to “select external customers,” and eventually to a broader cloud offering, becomes surprisingly small.

A future Meta Cloud wouldn’t need to compete head-on with AWS on enterprise databases or ERP workloads. It could start narrow: best-in-class AI compute for training and inference, exactly where demand is exploding.

Why This Matters

AI is changing the economics of cloud computing.

In the next decade, the primary cloud moat may no longer be the number of services offered, but:

- Access to scarce compute

- Efficiency at scale

- Performance per dollar for AI workloads

In that world, companies that own AI-native infrastructure have disproportionate leverage.

Meta Compute is an early signal of that shift.
