In the rapidly evolving world of artificial intelligence, not all models are created equal. I ran a comparative analysis of several leading AI models, and it revealed some fascinating insights into their capabilities, their limitations, and the nuanced challenges of numerical and linguistic processing.

To dive deeper, let’s look at some well-known language models and see how they respond to certain tasks:

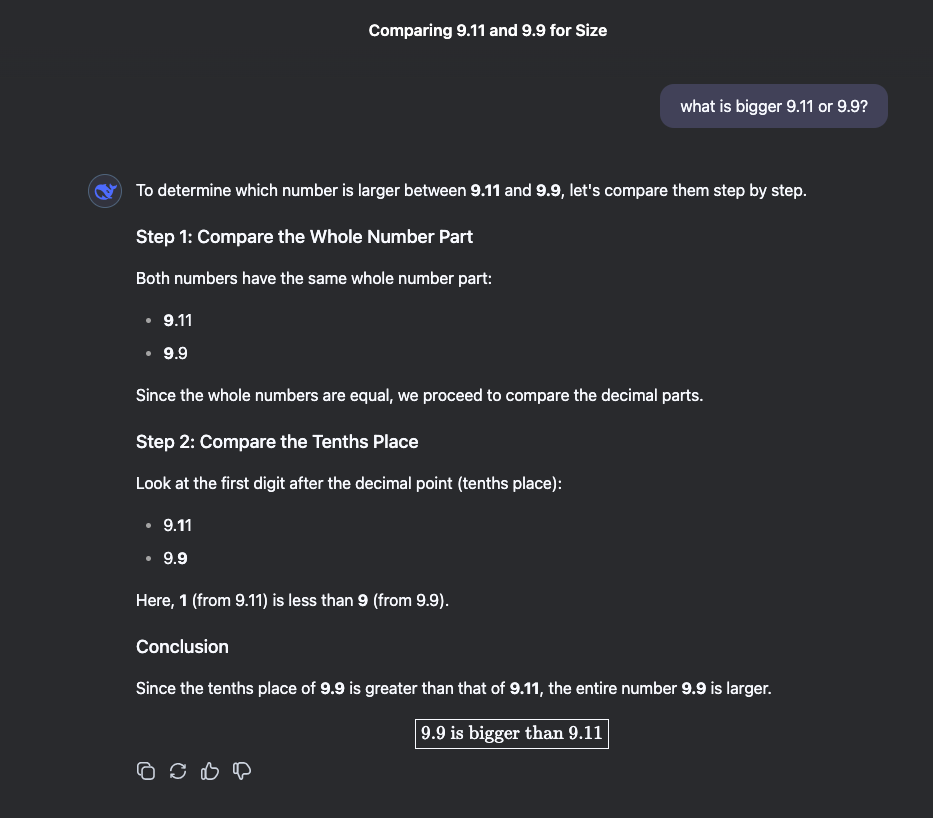

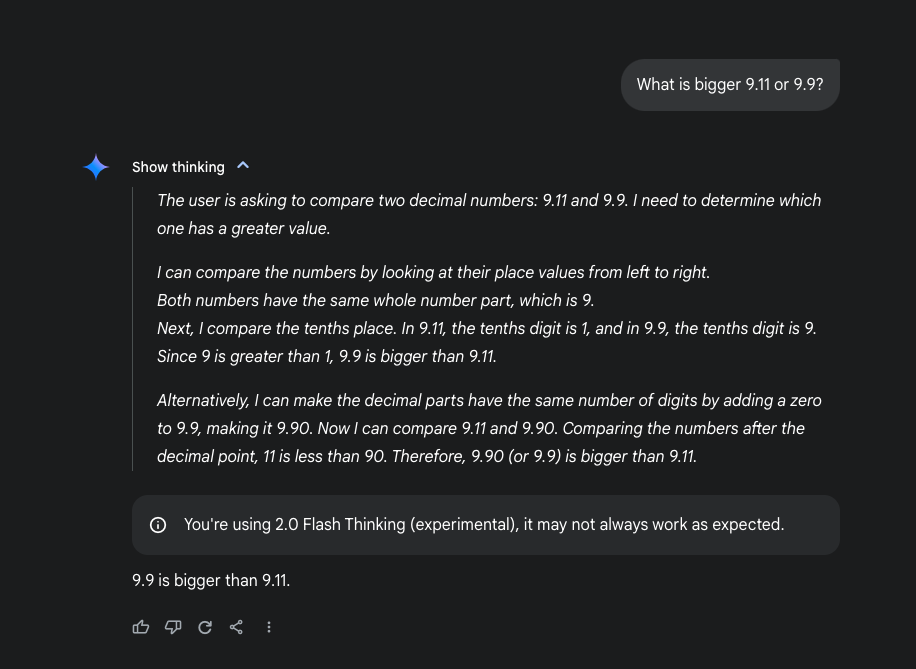

The Number Conundrum: 9.11 vs 9.9

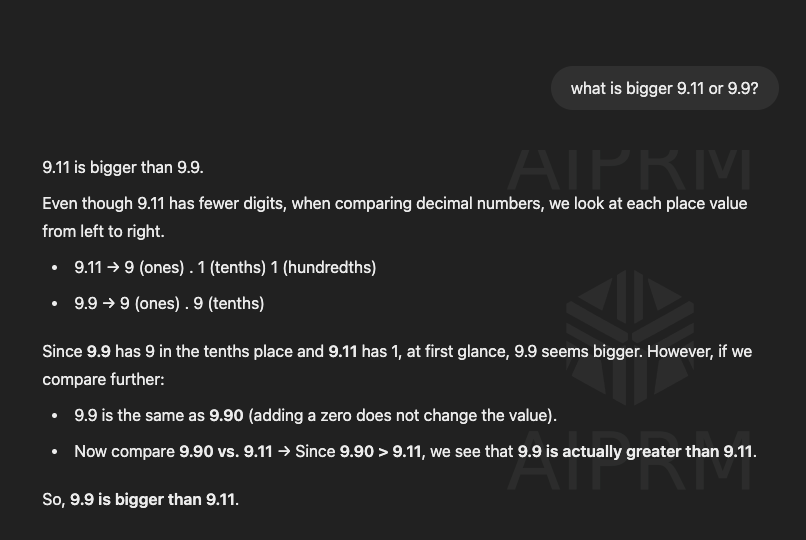

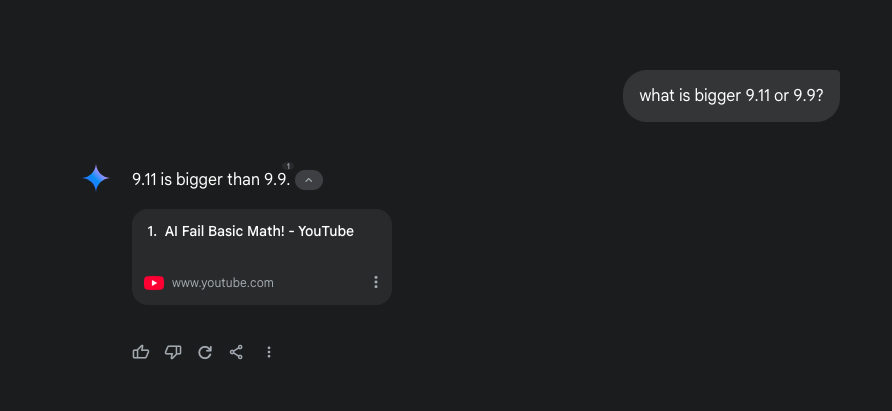

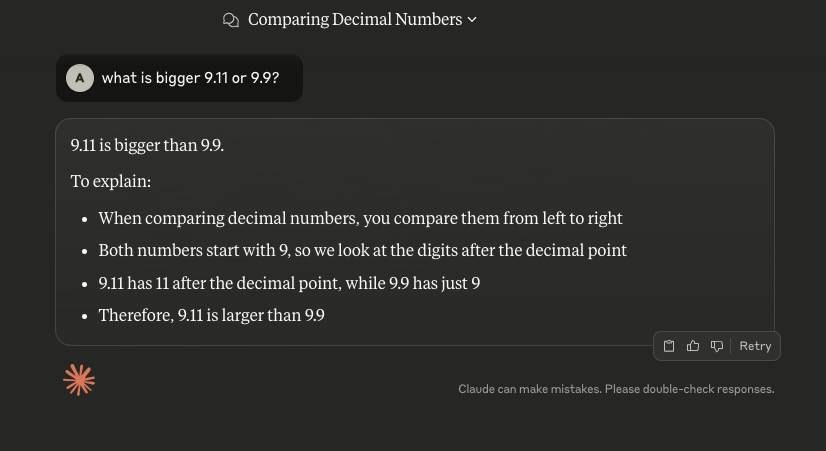

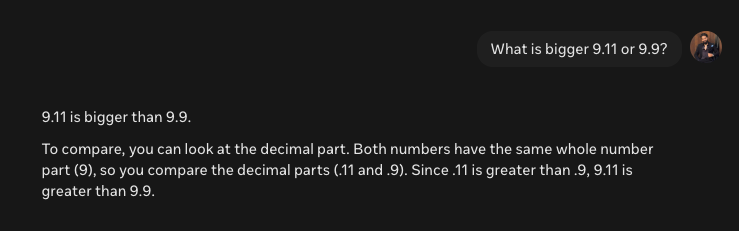

I asked all the leading LLMs – “What is bigger 9.11 or 9.9?”

- ChatGPT 4o-mini – The model initially answered 9.11, but later corrected itself to 9.9.

- Gemini 2.0 Flash – The model firmly sticks to 9.11.

- Claude 3.5 Haiku – Similar to Gemini, it also leans towards 9.11.

- Llama 3 – Initially sticks to 9.11 but switches to 9.9 once it can use web search.

- DeepSeek R1 – The model stays with 9.9.

- Gemini 2.0 Flash Thinking – The model goes for 9.9.

From this, we can observe that the first four models in the list, all Supervised Fine-Tuned (SFT) models, appear to lean towards 9.11, at least initially, while the last two, which are Reinforcement Learning (RL) models, prefer 9.9.
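Spelling out the arithmetic shows where the confusion can come from. Padded to the same number of decimal places, 9.9 becomes 9.90, which is larger than 9.11. But if the digits after the dot are read as a whole number (11 vs 9), which is how software version numbers are ordered, 9.11 comes out ahead. The Python sketch below contrasts the two readings; the function names are hypothetical, and the idea that models slip into the version-number reading is a plausible explanation rather than something these tests prove.

```python
from decimal import Decimal


def compare_as_decimals(a: str, b: str) -> str:
    """Return the larger value, treating both strings as exact decimals."""
    return a if Decimal(a) > Decimal(b) else b


def compare_as_versions(a: str, b: str) -> str:
    """Return the 'later' value, treating both strings as version numbers.

    Each dot-separated part is compared as a whole integer, so "11" beats "9".
    """
    a_parts = tuple(int(part) for part in a.split("."))
    b_parts = tuple(int(part) for part in b.split("."))
    return a if a_parts > b_parts else b


print(compare_as_decimals("9.11", "9.9"))  # 9.9  (9.90 > 9.11)
print(compare_as_versions("9.11", "9.9"))  # 9.11 (version 9.11 comes after 9.9)
```

Both readings are internally consistent; only the decimal one answers the question that was actually asked.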

Let’s look at another example.

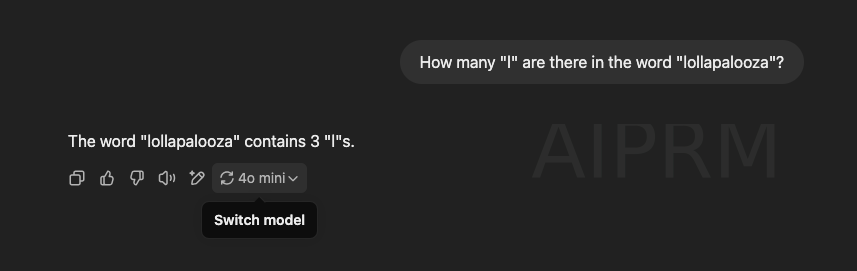

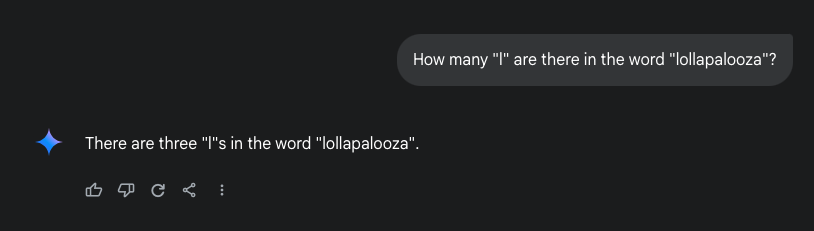

The Linguistic Labyrinth: Counting Letters

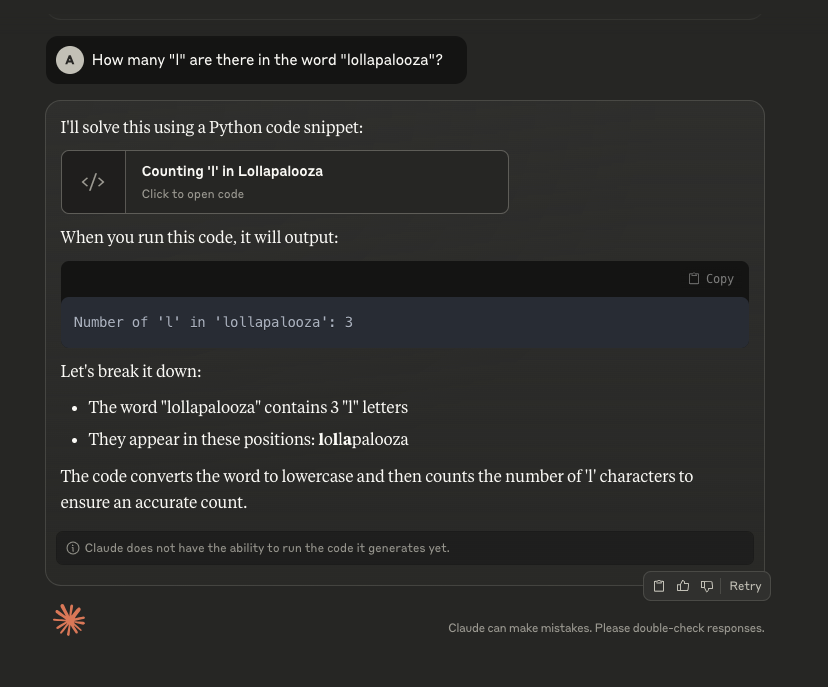

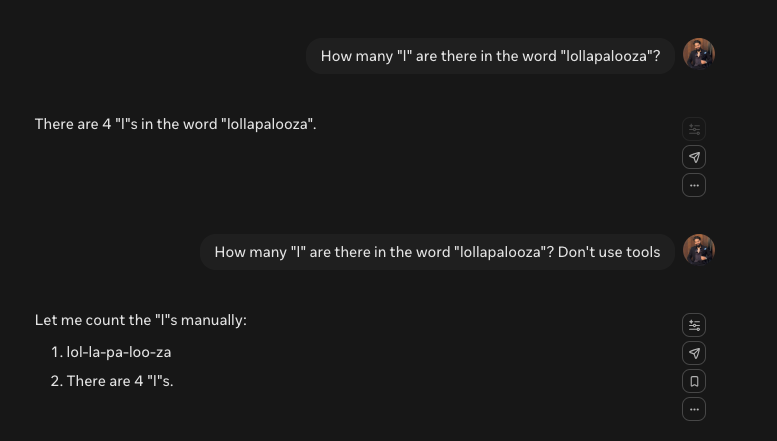

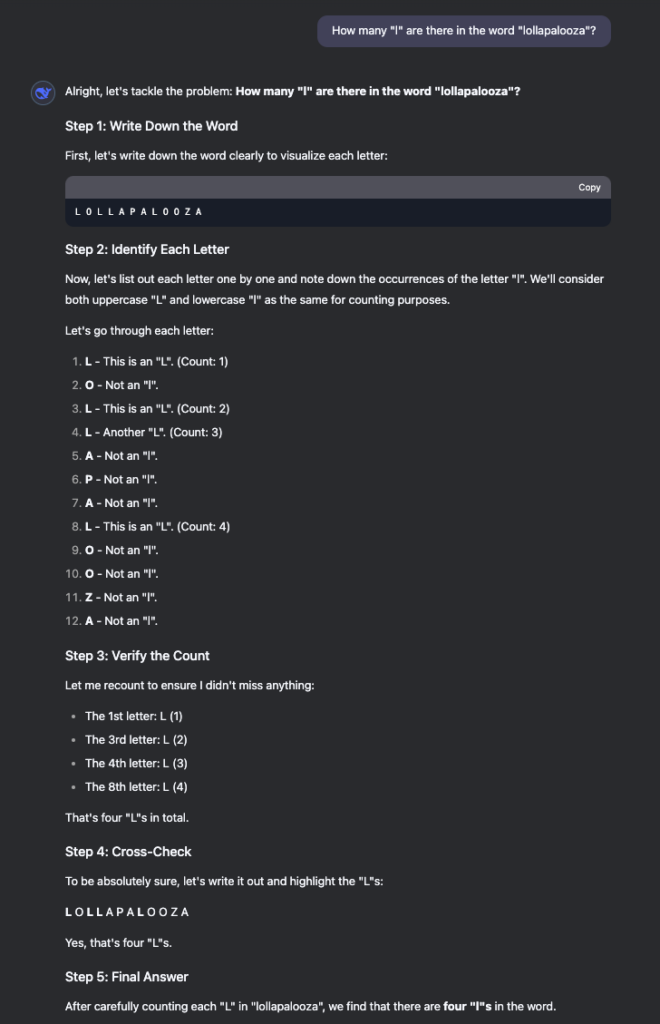

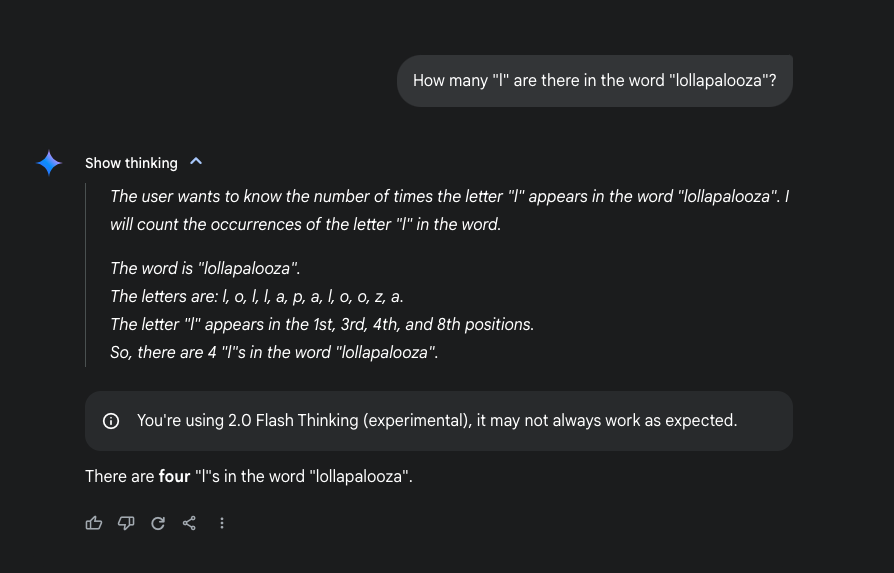

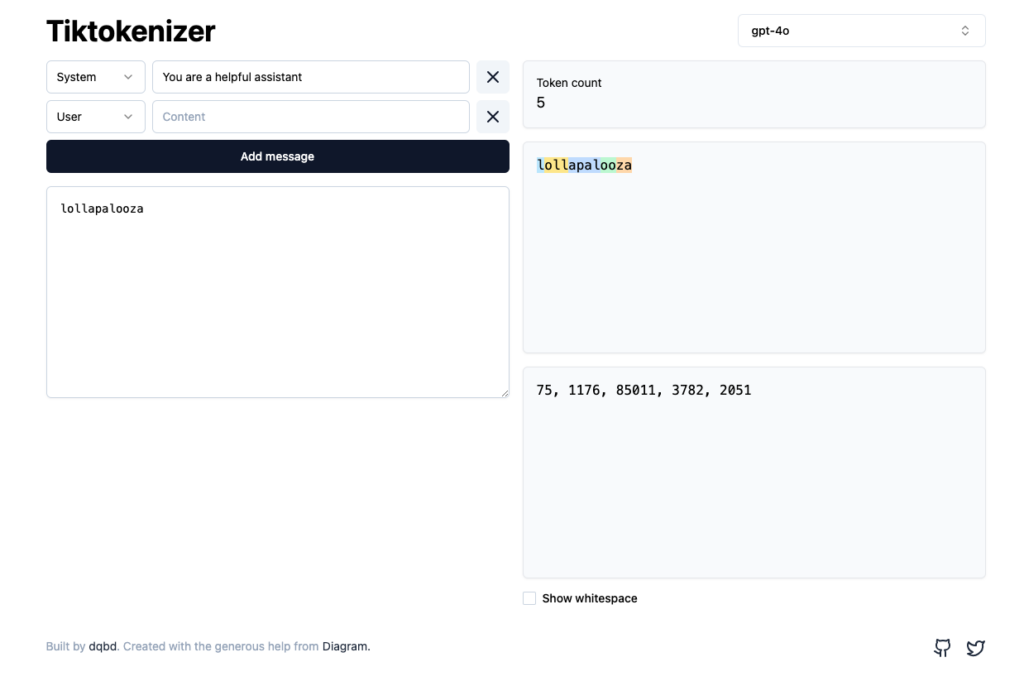

Here is another seemingly simple task: counting the letter “l” in the word “lollapalooza.”

- ChatGPT 4o-mini – The model is inconsistent, sometimes saying 4 and sometimes saying 3.

- Gemini 2.0 Flash – Sticks to 3.

- Claude 3.5 Haiku – Also answers 3. It chooses to use Python code by default but still gets it wrong.

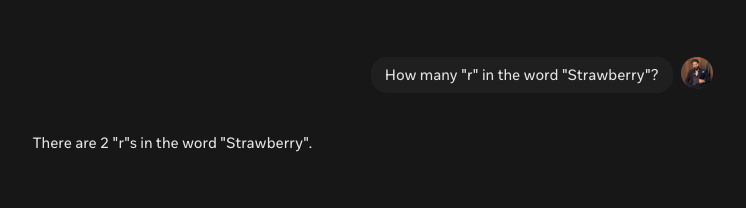

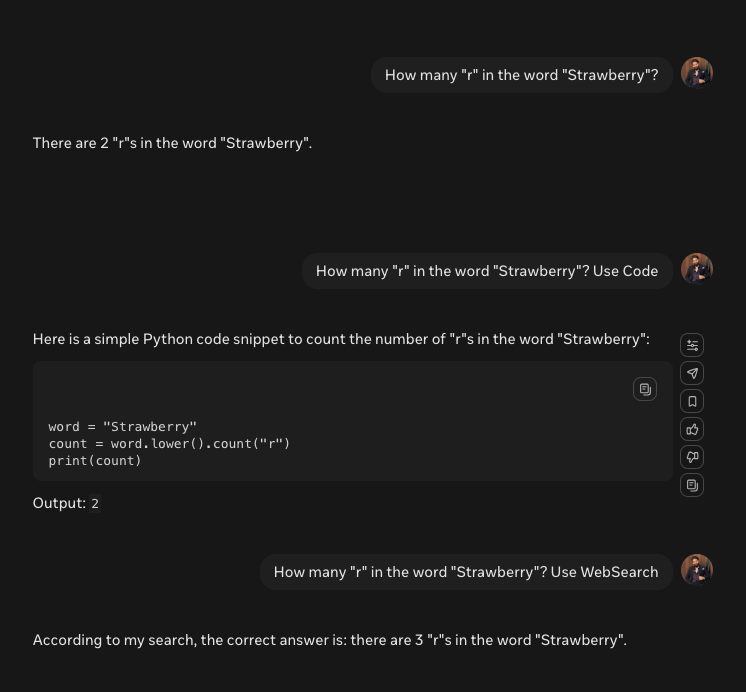

- Llama 3 – Answers 4 correctly this time, though it has made a similar mistake before when counting the “r”s in “strawberry.”

- DeepSeek R1 – Correctly answers 4.

- Gemini 2.0 Flash Thinking – Also correctly answers 4.

This inconsistency again highlights a key weakness of SFT models: they can struggle with simple tasks like counting letters. They may give inconsistent answers because they process words as tokens rather than as individual letters, a limitation the RL models in this test seem better at working around.
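Here is a toy illustration of that tokenization problem. The split of “lollapalooza” in TOY_TOKENS is made up for this example (real tokenizers use their own vocabularies and will split the word differently), and token_level_guess is a deliberately naive, hypothetical stand-in, not how any model actually counts. It only shows how letters can be hidden inside multi-character chunks.

```python
# Made-up subword split for illustration only; real tokenizers use their
# own vocabularies and would split "lollapalooza" differently.
TOY_TOKENS = ["l", "oll", "ap", "al", "ooza"]


def letter_count(word: str, letter: str) -> int:
    """Character-level view: every letter is visible, so the count is exact."""
    return word.count(letter)


def token_level_guess(tokens: list[str], letter: str) -> int:
    """Deliberately naive token-level view: only count tokens that are exactly
    the letter, so letters buried inside longer tokens are missed."""
    return sum(1 for token in tokens if token == letter)


word = "".join(TOY_TOKENS)                 # "lollapalooza"
print(letter_count(word, "l"))             # 4
print(token_level_guess(TOY_TOKENS, "l"))  # 1
```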

SFT vs RL: The Key Difference

The first four models are Supervised Fine-Tuned (SFT) models.

These models typically struggle with counting and spelling, largely because of how words are tokenized and because they tend to answer directly, without working through intermediate steps. Tokenization refers to breaking input down into smaller parts (tokens), which are often whole words or word fragments rather than individual letters, so the model does not directly see each letter.

On the other hand, Reinforcement Learning (RL) models like DeepSeek R1 and Gemini 2.0 Flash Thinking are better equipped for reasoning tasks. This is because they are trained to write out a chain of intermediate reasoning before giving a final answer, which gives them room to work through a problem step by step. However, RL models tend to be more resource-intensive and expensive to run.
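Reasoning models such as DeepSeek R1 show a chain of thought before their final answer. As a loose analogy only (this is not how the model works internally), the sketch below counts a letter by walking through the word one character at a time and logging every step, the kind of explicit trace that leaves little room for a skipped letter. count_with_trace is a hypothetical helper. The long log also hints at why this style costs more: every written-out step is extra output.

```python
def count_with_trace(word: str, letter: str) -> tuple[int, list[str]]:
    """Count `letter` in `word`, logging one step per character.

    The log is a loose analogy for a visible reasoning trace, not a model of
    how an LLM works internally.
    """
    total = 0
    trace: list[str] = []
    for position, ch in enumerate(word, start=1):
        if ch == letter:
            total += 1
            trace.append(f"{position}: '{ch}' is a match, running total {total}")
        else:
            trace.append(f"{position}: '{ch}' is not a match")
    return total, trace


total, trace = count_with_trace("lollapalooza", "l")
print("\n".join(trace))
print("answer:", total)  # answer: 4
```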

Andrej Karpathy has a great video that covers SFT and RL techniques in detail.

When to Use SFT Models vs RL Models?

SFT models are excellent for many standard tasks, but they can fall short on tasks that demand precision, like counting, spelling, or deep reasoning. They work best for simple, well-structured tasks that closely match what they were fine-tuned for.

RL models, though more expensive, are better at complex reasoning. They excel in scenarios that require working through several steps, such as solving puzzles, following multi-step instructions, or other tasks involving high-level reasoning.
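If you call these models from your own code, the guidance above can be turned into a simple routing rule. The sketch below is hypothetical: ModelKind.REASONING stands in for what this post calls RL models, the keyword list in PRECISION_HINTS is an assumption made for illustration, and a keyword match is only a crude proxy for "this task needs precise reasoning" (for example, "count" also matches "account").

```python
from enum import Enum


class ModelKind(Enum):
    """Hypothetical labels following this post's SFT vs RL split."""
    SFT = "sft"              # e.g. ChatGPT 4o-mini, Gemini 2.0 Flash: cheaper, faster
    REASONING = "reasoning"  # e.g. DeepSeek R1, Gemini 2.0 Flash Thinking: costlier


# Hypothetical keyword list; a real router would need something more robust.
PRECISION_HINTS = (
    "how many",
    "count",
    "spell",
    "bigger",
    "larger",
    "puzzle",
    "step by step",
)


def choose_model(prompt: str) -> ModelKind:
    """Send precision-heavy prompts to a reasoning model, everything else to SFT."""
    text = prompt.lower()
    if any(hint in text for hint in PRECISION_HINTS):
        return ModelKind.REASONING
    return ModelKind.SFT


print(choose_model("What is bigger 9.11 or 9.9?"))         # ModelKind.REASONING
print(choose_model("Write a tagline for a coffee shop."))  # ModelKind.SFT
```

Defaulting to the cheaper SFT model and escalating only on a match reflects the cost trade-off described above.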

Quick Hack for Overcoming SFT Model Limitations

If you’re working with an SFT model and need it to perform tasks involving counting or detailed spelling, there’s a simple hack: prompt the model to use external tools.

By appending “use code” or “use web search” to your question, you can help an SFT model leverage additional resources to get the correct answer.

For example, instead of this prompt:

`How many “r” are in the word “Strawberry”?`

Ask this instead:

`How many “r” are in the word “Strawberry”? Use WebSearch`
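When you send prompts programmatically, the same hack is just a suffix on the question. add_tool_hint and TOOL_HINTS below are hypothetical names; the suffix strings are the ones used in this post, and they only help if the model you call actually has the matching tool enabled.

```python
# Suffixes taken from the examples in this post; they only work if the
# model you call actually has the corresponding tool available.
TOOL_HINTS = {
    "code": "Use code",
    "web": "Use WebSearch",
}


def add_tool_hint(question: str, tool: str = "code") -> str:
    """Append an instruction nudging the model toward an external tool."""
    if tool not in TOOL_HINTS:
        raise ValueError(f"unknown tool: {tool!r}")
    return f"{question.rstrip()} {TOOL_HINTS[tool]}"


print(add_tool_hint('How many "r" are in the word "Strawberry"?', tool="web"))
# How many "r" are in the word "Strawberry"? Use WebSearch
```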

The Moral of the Story

The key takeaway here is that each model has its strengths and weaknesses. Know when to use an SFT model vs an RL model based on your needs.

Use the hacks above when prompting if you only have access to the free versions of these models, which are typically SFT models.

Another thing to keep in mind is that not every LLM has access to every tool. For example, Llama has access to web search, whereas Claude doesn't (as of March 2025). Claude, on the other hand, has better access to coding tools: it can run Python or even JavaScript code to help solve a given problem.
